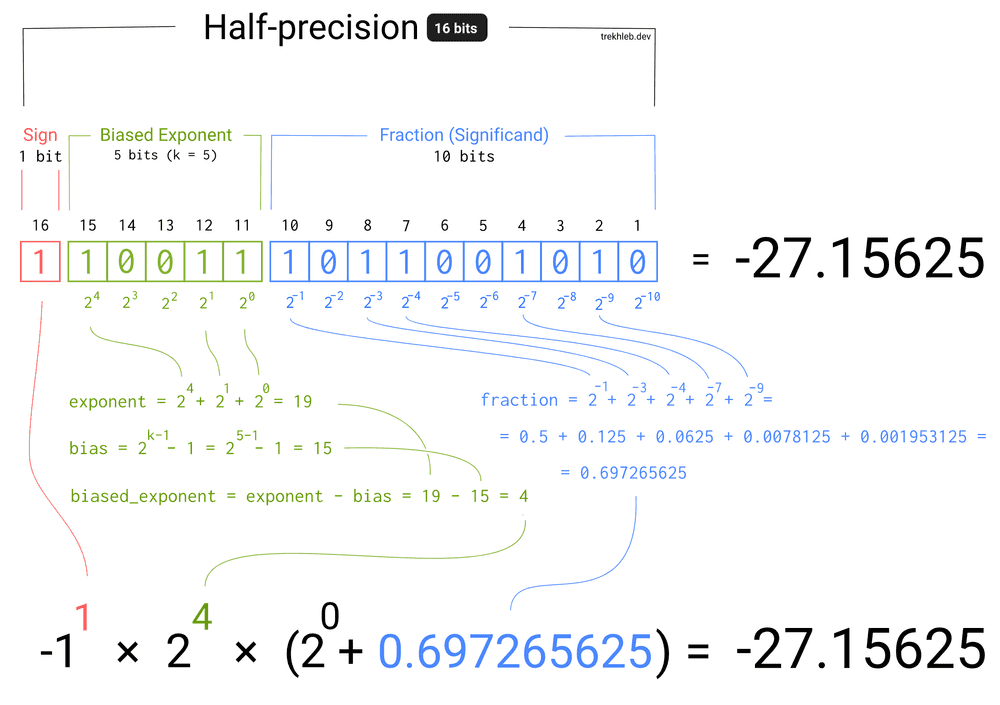

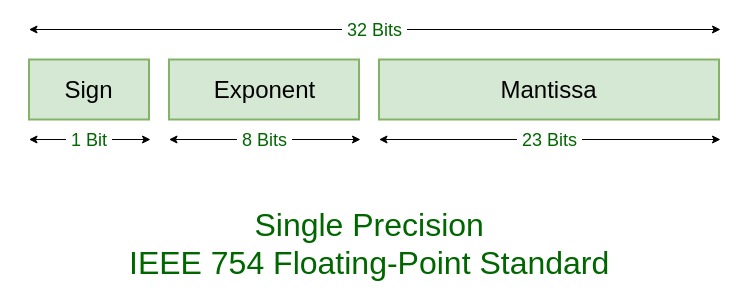

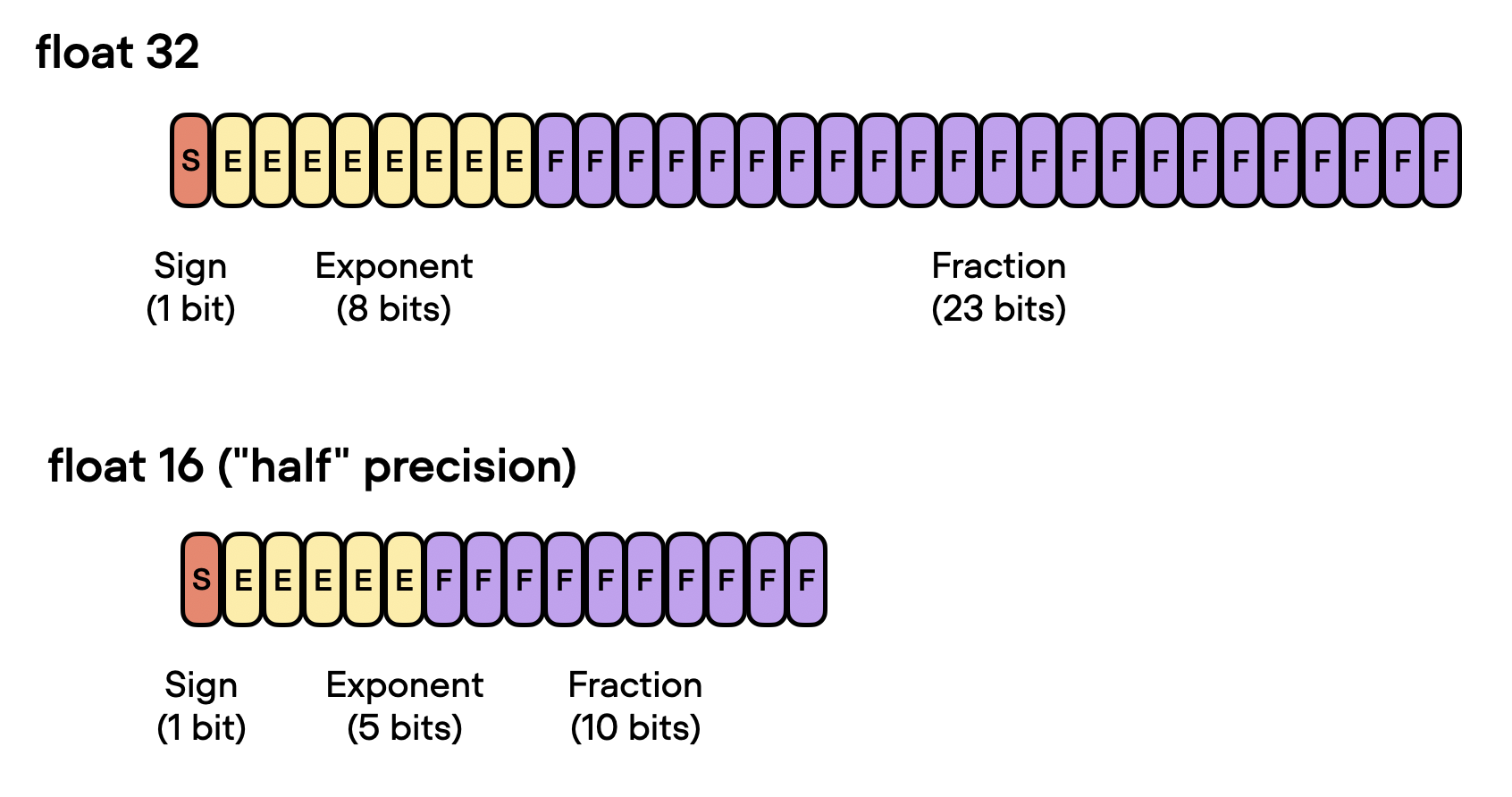

16, 8, and 4-bit Floating Point Formats — How Does it Work? | by Dmitrii Eliuseev | Sep, 2023 | Towards Data Science

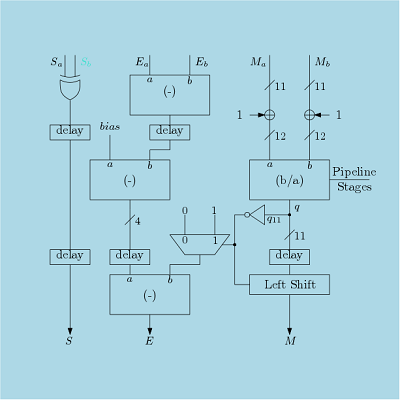

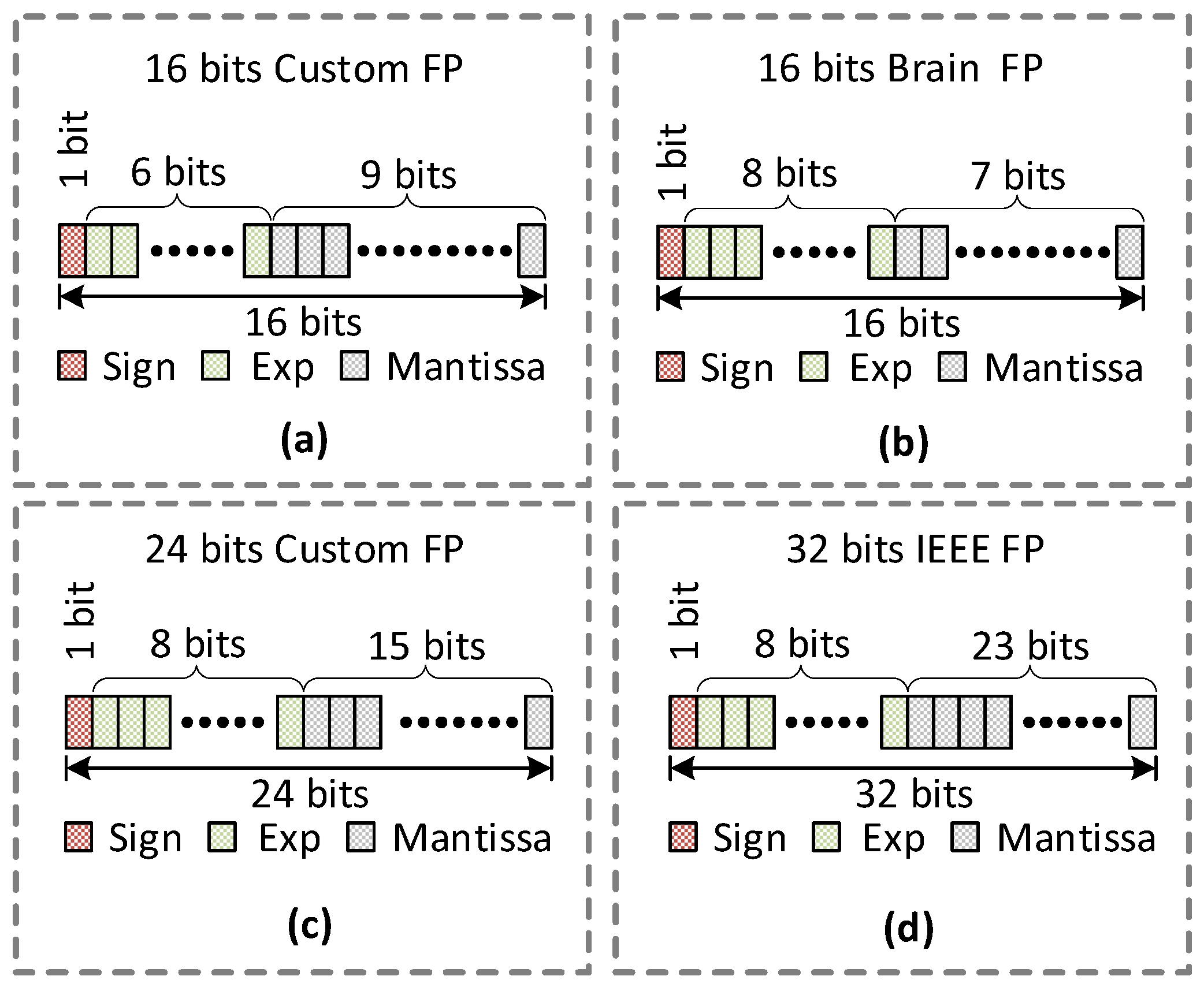

Sensors | Free Full-Text | Optimal Architecture of Floating-Point Arithmetic for Neural Network Training Processors

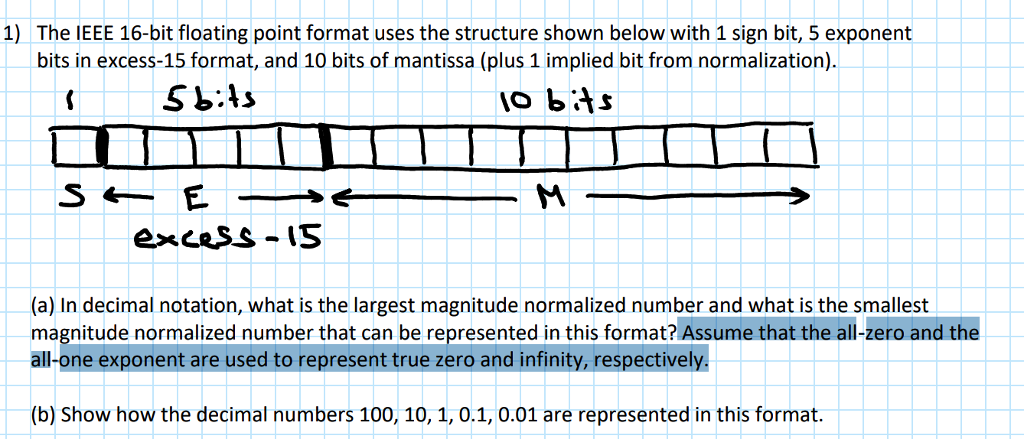

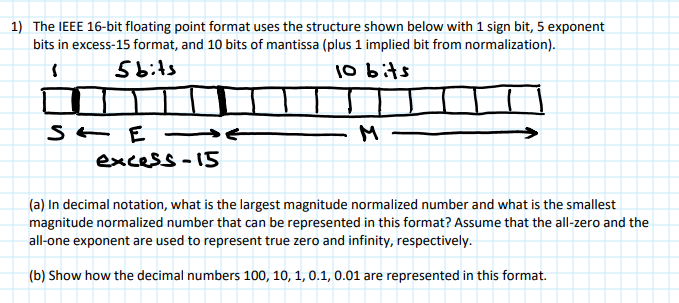

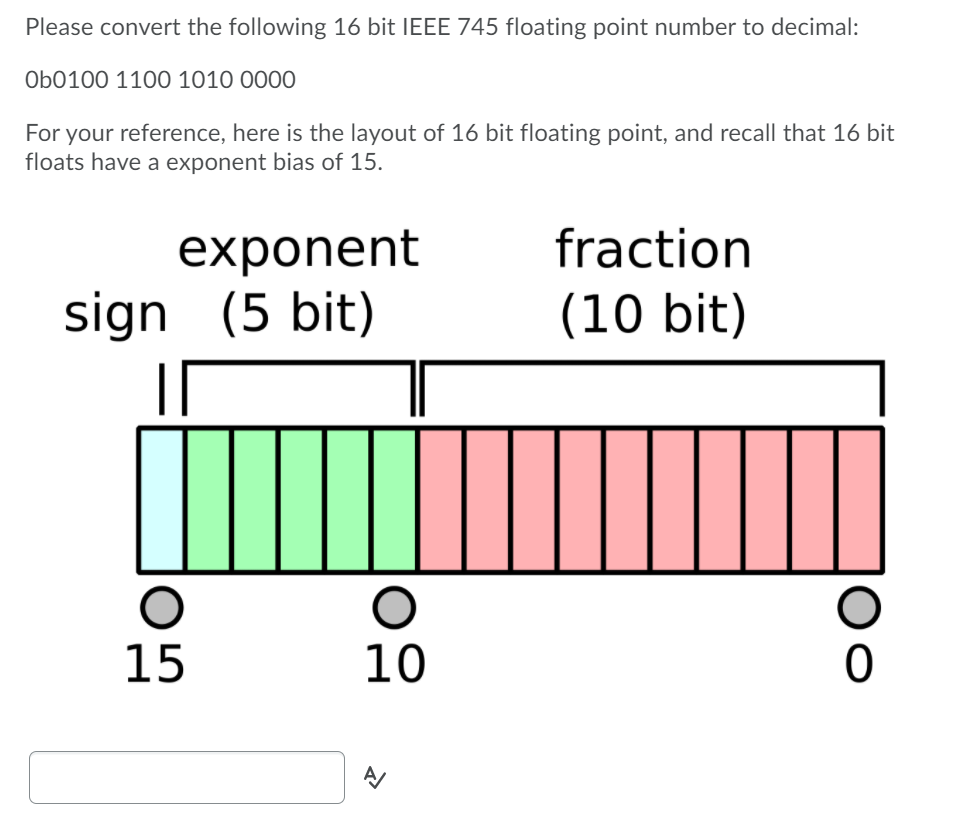

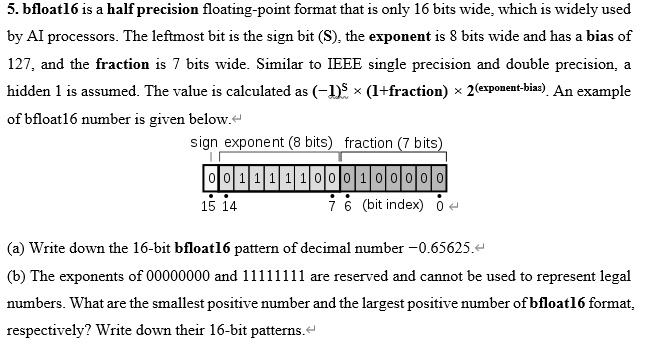

SOLVED: 5. bfloat16 is a half precision floating-point format that is only 16 bits wide, which is widely used by AI processors. The leftmost bit is the sign bit (S), the exponent