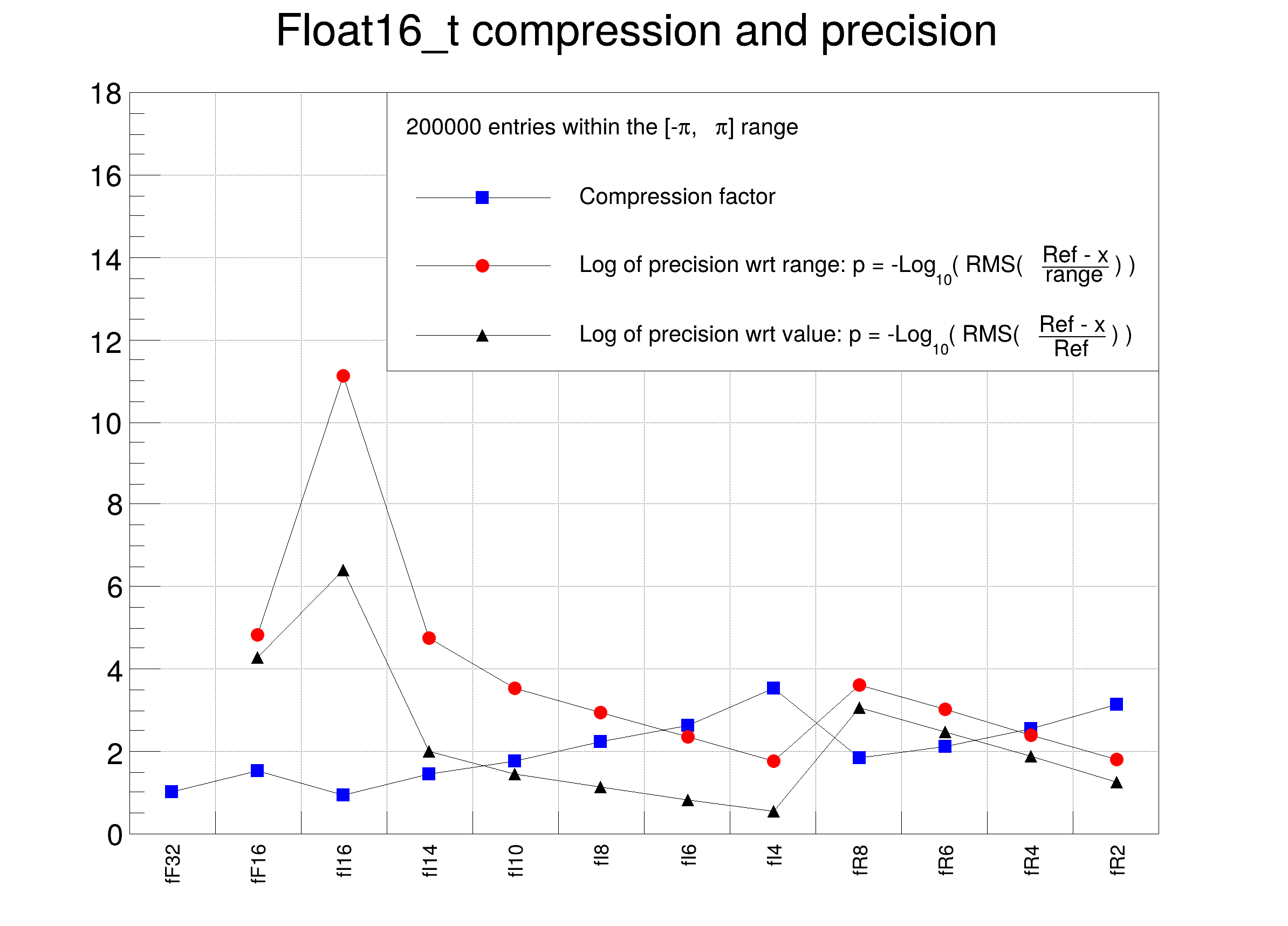

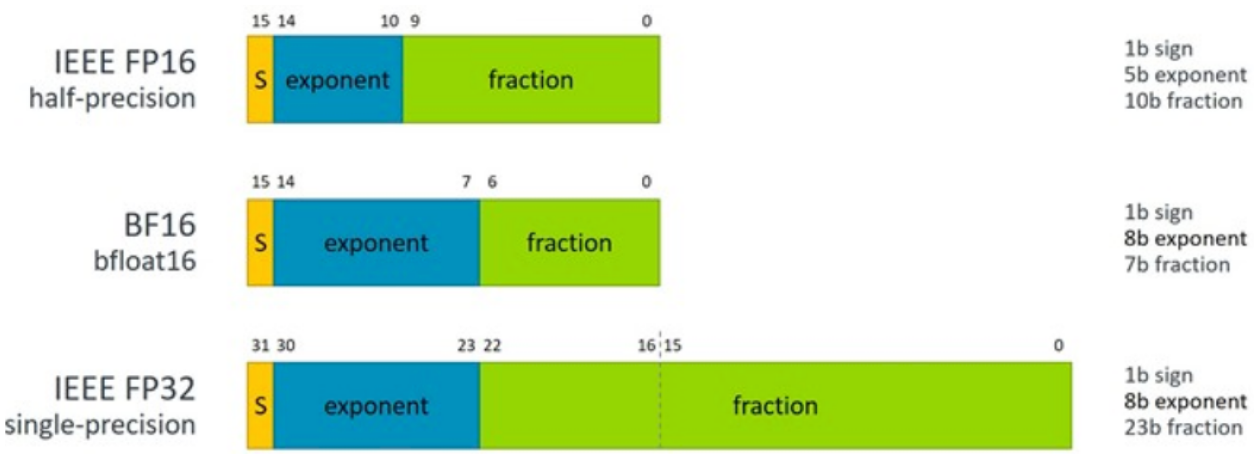

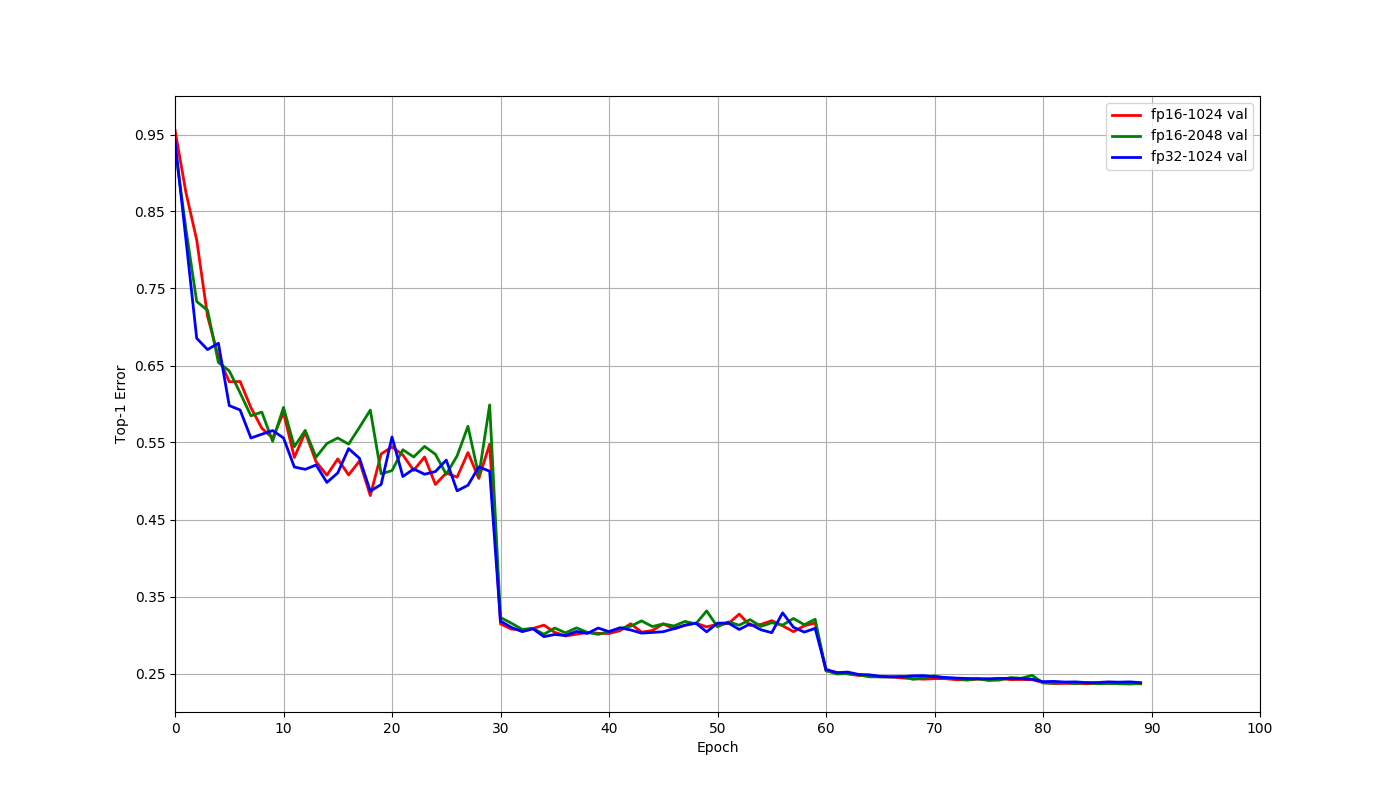

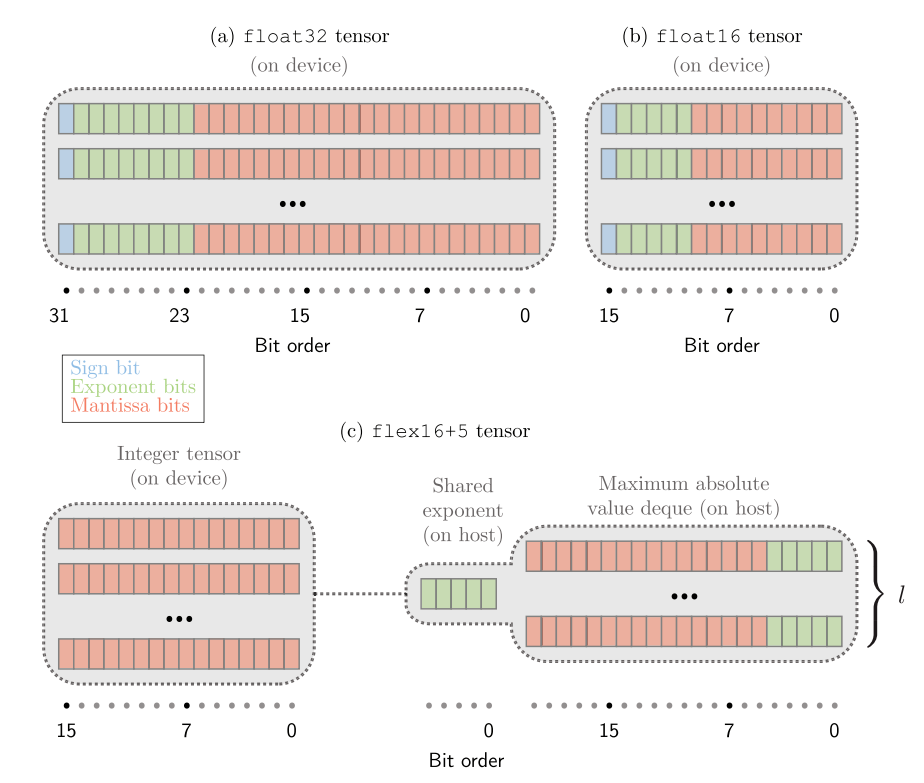

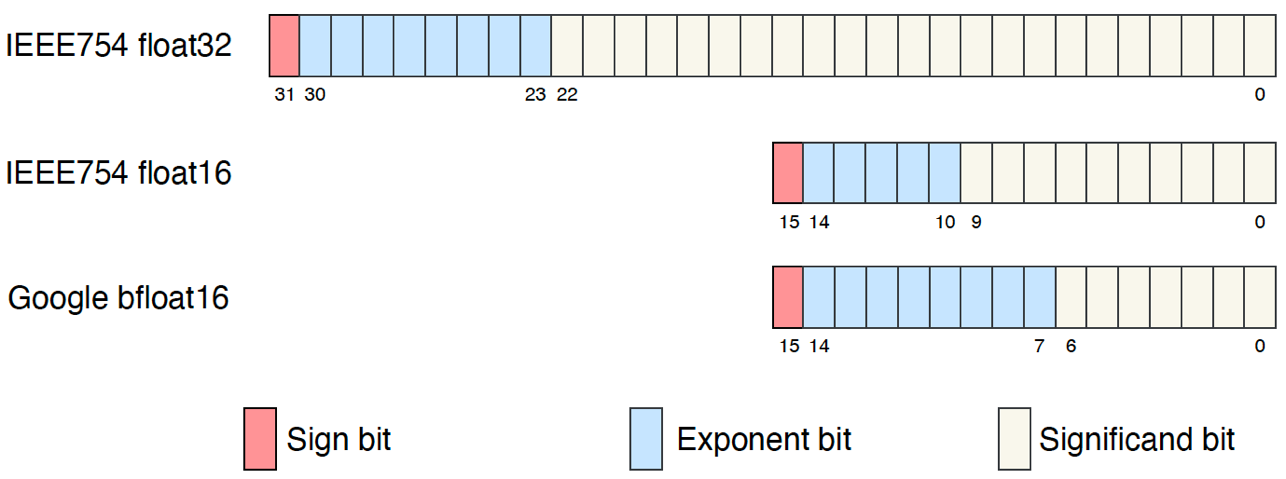

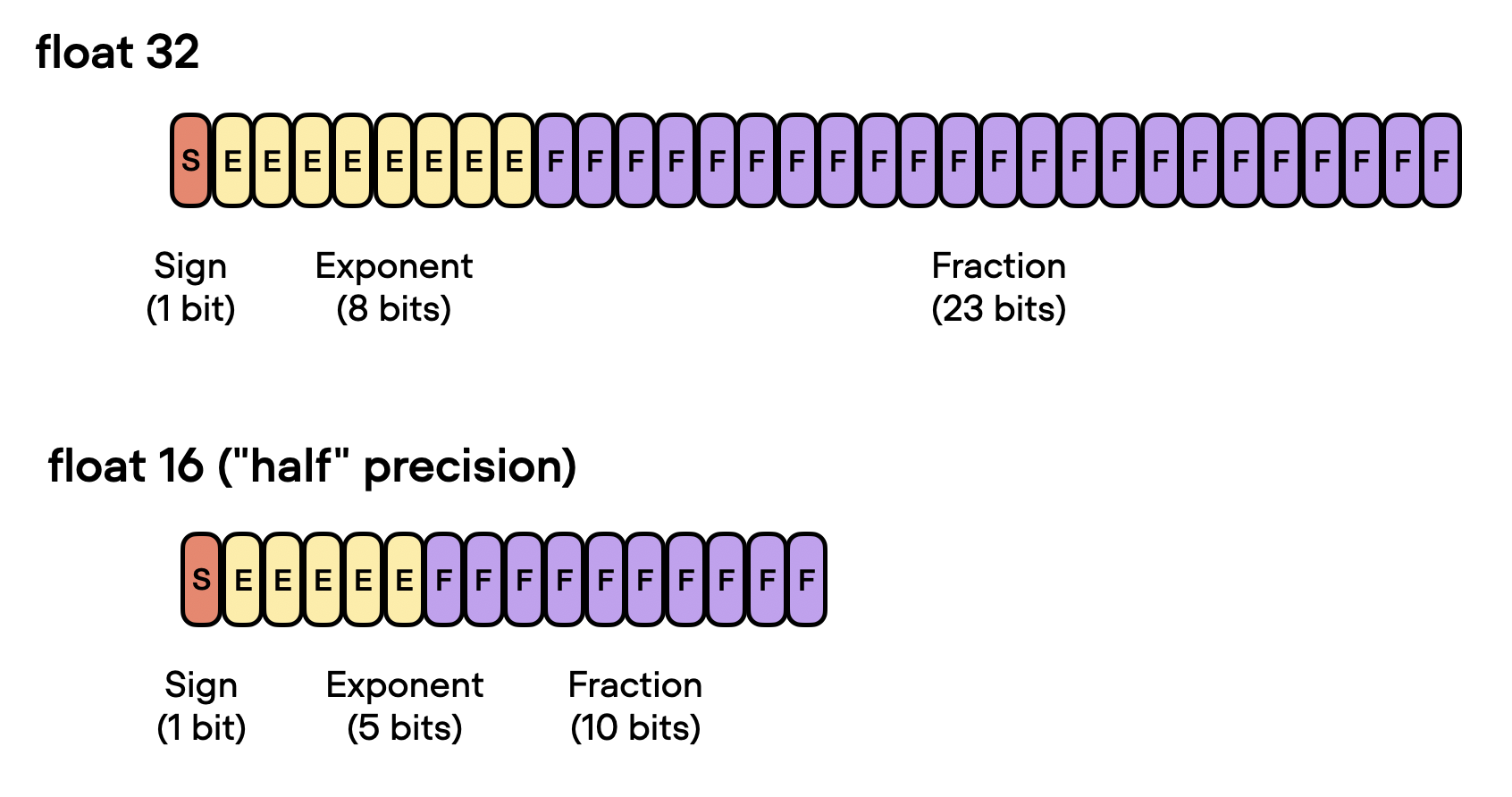

Comparison of the float32, bfloat16, and float16 numerical formats. The... | Download Scientific Diagram

GitHub - acgessler/half_float: C++ implementation of a 16 bit floating-point type mimicking most of the IEEE 754 behaviour. Compatible with the half data type used as texture format by OpenGL/Direct3D.

GitHub - x448/float16: float16 provides IEEE 754 half-precision format (binary16) with correct conversions to/from float32

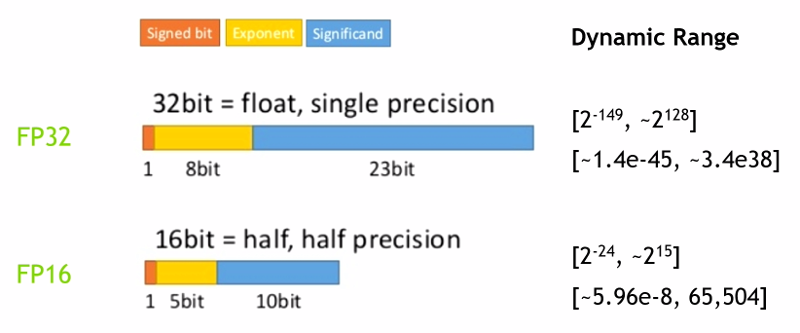

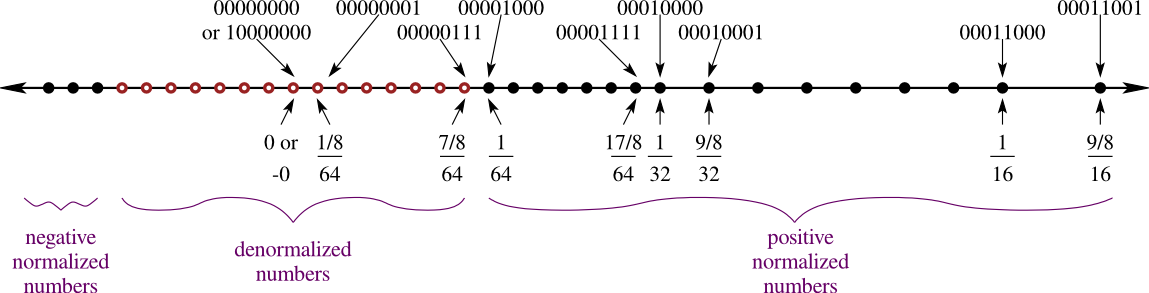

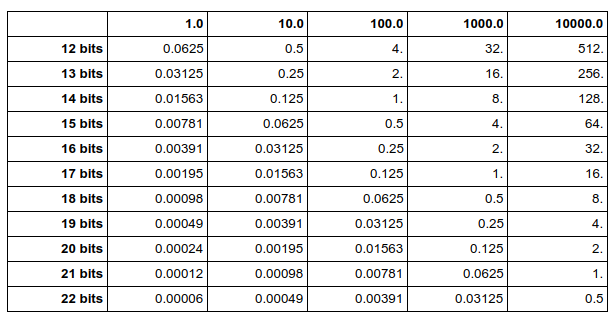

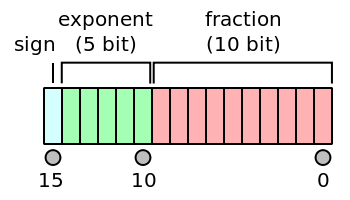

“Half Precision” 16-bit Floating Point Arithmetic » Cleve's Corner: Cleve Moler on Mathematics and Computing - MATLAB & Simulink

TensorFlow Model Optimization Toolkit — float16 quantization halves model size — The TensorFlow Blog

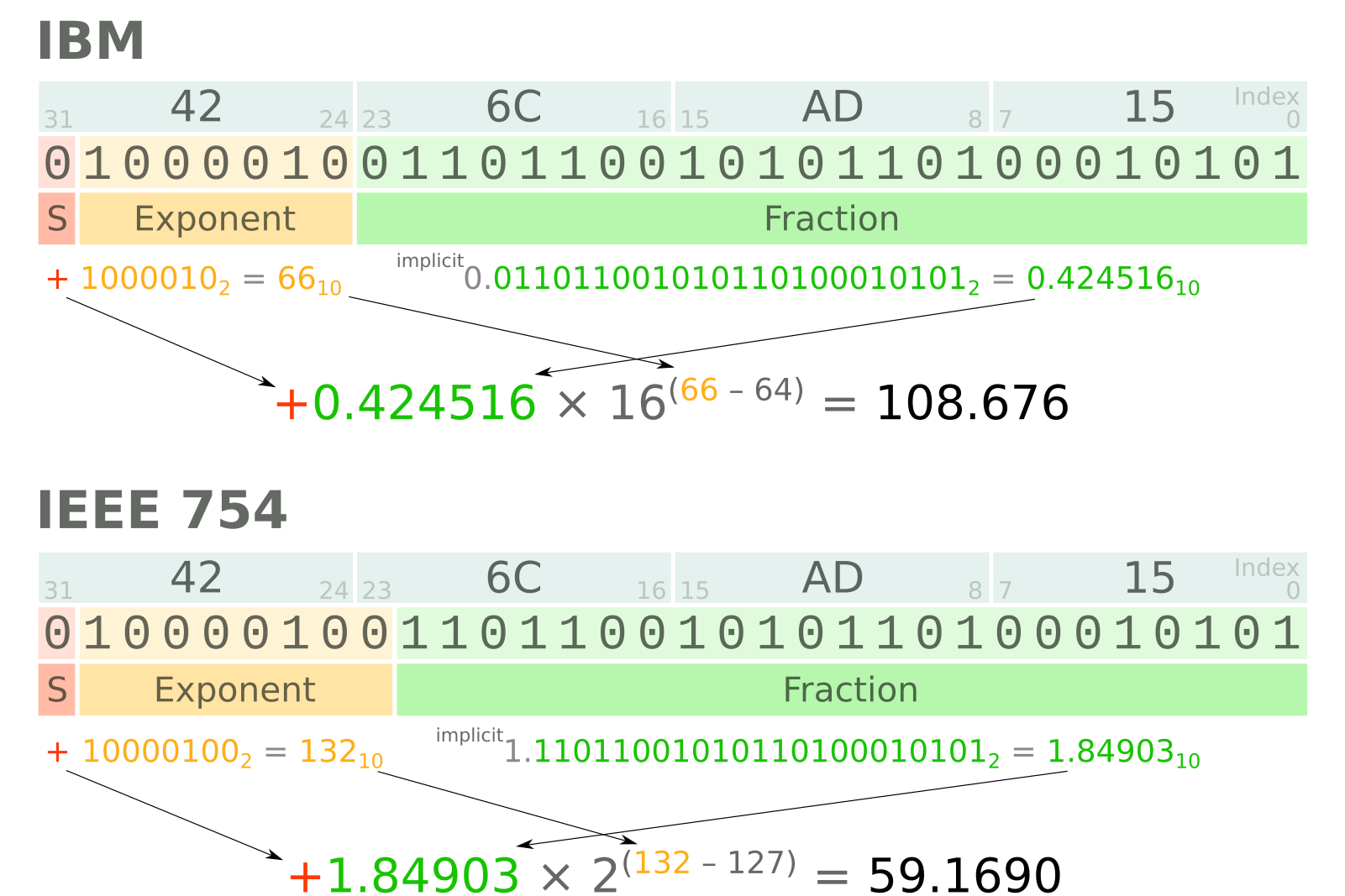

Contrast between IEEE 754 Single-precision 32-bit floating-point format... | Download Scientific Diagram

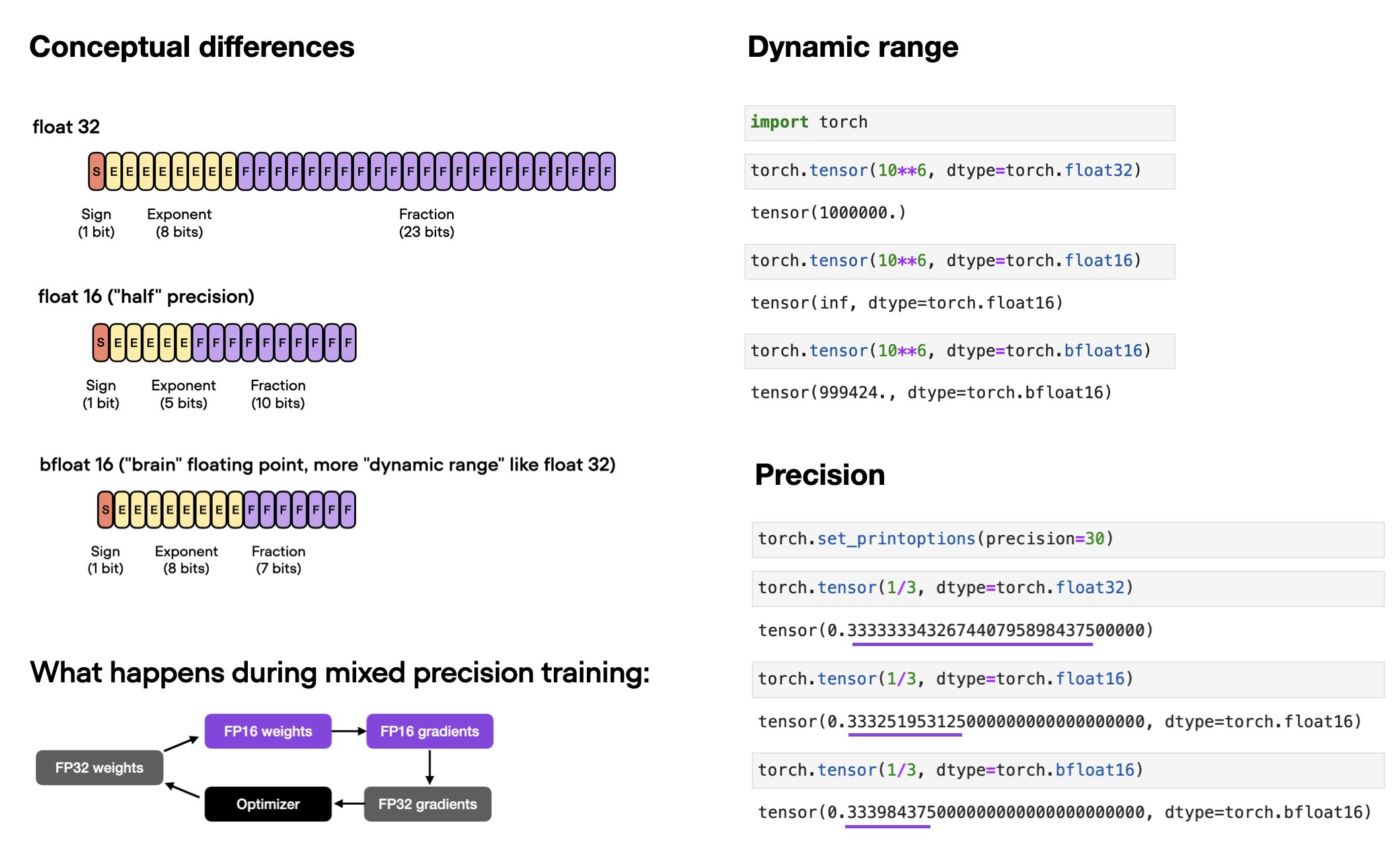

Sebastian Raschka on Twitter: "When using automatic mixed-precision training to accelerate model training, there are two common options: float16 and bfloat16 (16-bit "brain" floating points). What's the difference? Compared to float16, bfloat16