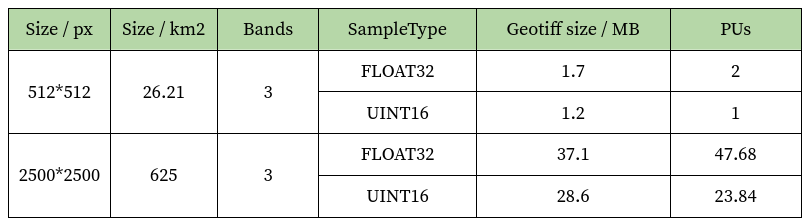

GitHub - purescript-contrib/purescript-float32: Float32, single-precision 32-bit floating-point number type.
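
A Float32 value holds fewer bits than a binary64 float, so converting a number to it rounds the number. The sketch below is not the PureScript library's API. It is a minimal Python illustration that assumes only the standard `struct` module, whose `'f'` format packs a value into binary32. The helper name `to_float32` is hypothetical.

```python
import struct

def to_float32(x: float) -> float:
    """Round a Python float (binary64) to the nearest binary32 value.

    Packing with the 'f' format rounds to single precision; unpacking
    widens the result back to a Python float exactly.
    """
    return struct.unpack("<f", struct.pack("<f", x))[0]

print(to_float32(0.1))         # 0.10000000149011612
print(to_float32(16777217.0))  # 16777216.0, since 2**24 + 1 has no binary32 encoding
```

Integers above 2**24 are where binary32 first starts skipping integers, because its significand has only 24 bits of precision.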

GitHub - stdlib-js/number-float32-base-to-word: Return an unsigned 32-bit integer corresponding to the IEEE 754 binary representation of a single-precision floating-point number.
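
The conversion this package describes reinterprets the 32 bits of a binary32 value as an unsigned integer. The following is a hedged Python sketch of that idea, not the stdlib-js implementation. It assumes the input is first rounded to single precision, and the name `float32_to_word` is hypothetical.

```python
import struct

def float32_to_word(x: float) -> int:
    """Return the unsigned 32-bit integer whose bits encode x as binary32.

    x is rounded to single precision by the 'f' pack; the same four bytes
    are then read back as a little-endian unsigned 32-bit integer ('I').
    """
    return struct.unpack("<I", struct.pack("<f", x))[0]

print(hex(float32_to_word(1.0)))   # 0x3f800000
print(hex(float32_to_word(-2.0)))  # 0xc0000000
```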

Multiple precision results in Float32, Float64, and BigFloat128 on (14).

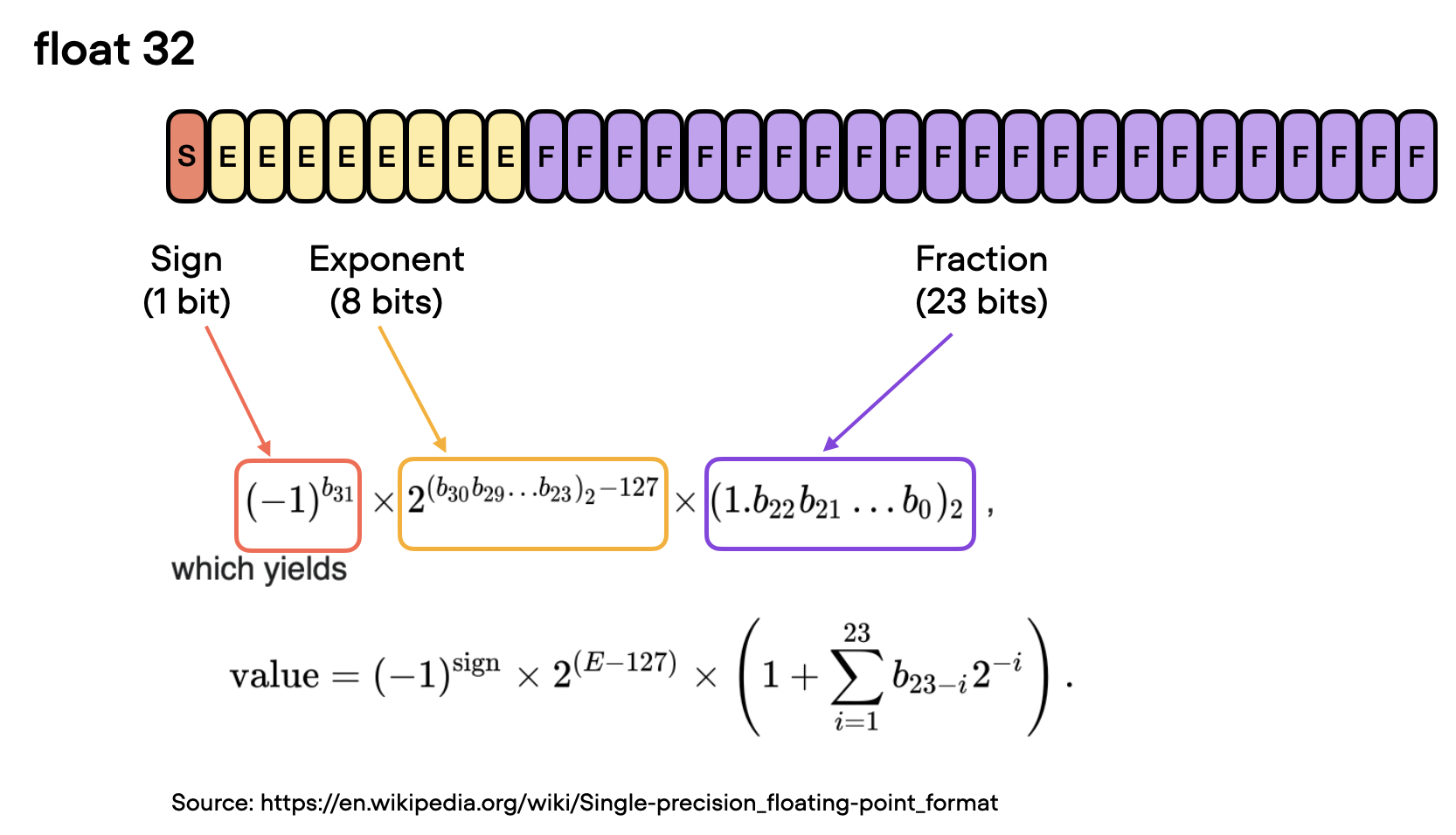

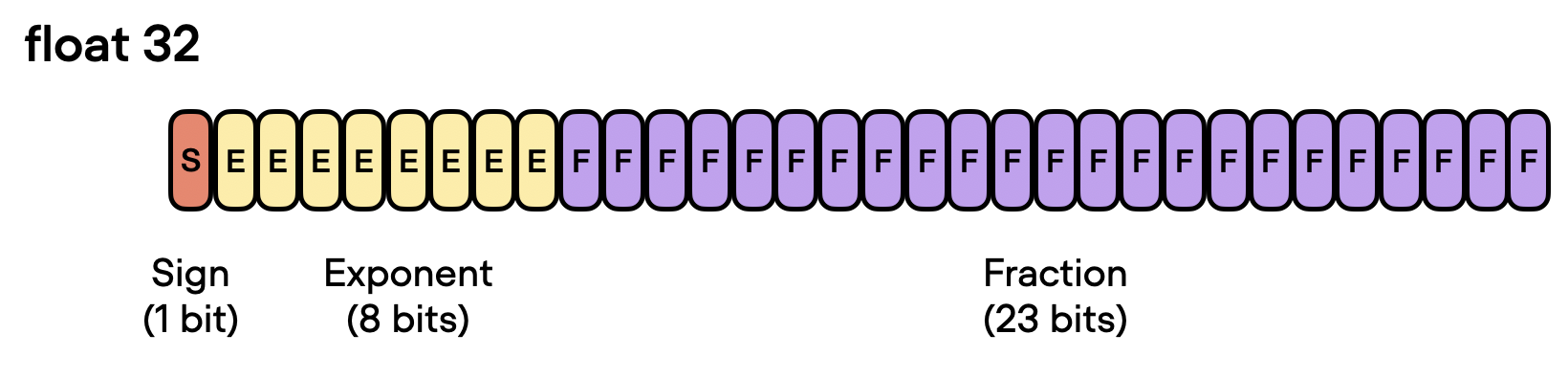

Understanding 32-Bit Floating Point Number Representation (binary32 format) - Education and Teaching - Arduino Forum
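
The binary32 format splits its 32 bits into 1 sign bit, 8 exponent bits (bias 127), and 23 fraction bits. A normal number has the value (-1)^sign × (1 + fraction/2^23) × 2^(exponent − 127). A subnormal number has exponent field 0 and the value (-1)^sign × fraction × 2^−149. The Python sketch below decodes and rebuilds those fields. It is illustrative only, the helper names are hypothetical, and it rejects infinities and NaNs rather than handling them.

```python
import struct

def decode_binary32(x: float) -> tuple[int, int, int]:
    """Split the binary32 encoding of x into (sign, exponent, fraction) fields."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, biased by 127
    fraction = bits & 0x7FFFFF       # 23 bits
    return sign, exponent, fraction

def value_from_fields(sign: int, exponent: int, fraction: int) -> float:
    """Rebuild the value from its fields (normal, subnormal, and zero only)."""
    if exponent == 0xFF:
        raise ValueError("infinity/NaN encodings are not handled in this sketch")
    if exponent == 0:  # subnormal or zero: no implicit leading 1
        magnitude = fraction * 2.0 ** -149
    else:              # normal: implicit leading 1
        magnitude = (1 + fraction / 2**23) * 2.0 ** (exponent - 127)
    return -magnitude if sign else magnitude

print(decode_binary32(-6.25))  # (1, 129, 4718592), i.e. -1.5625 × 2^2
```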

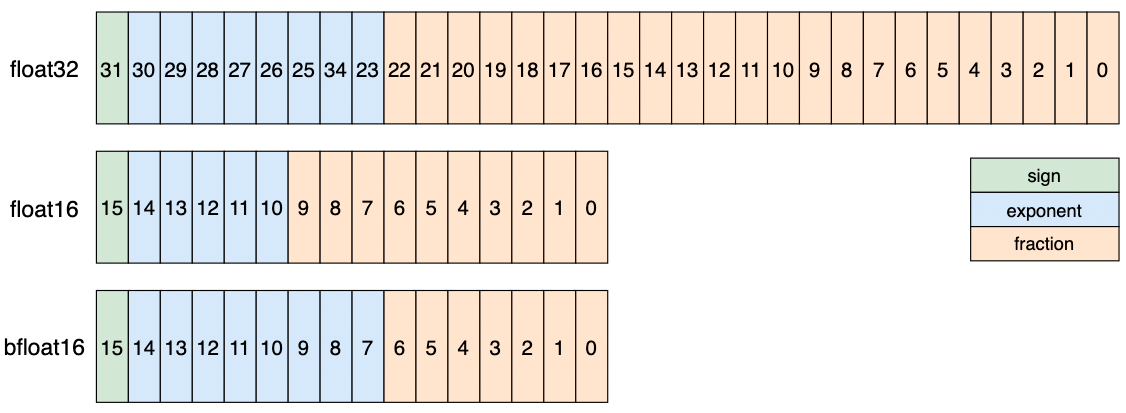

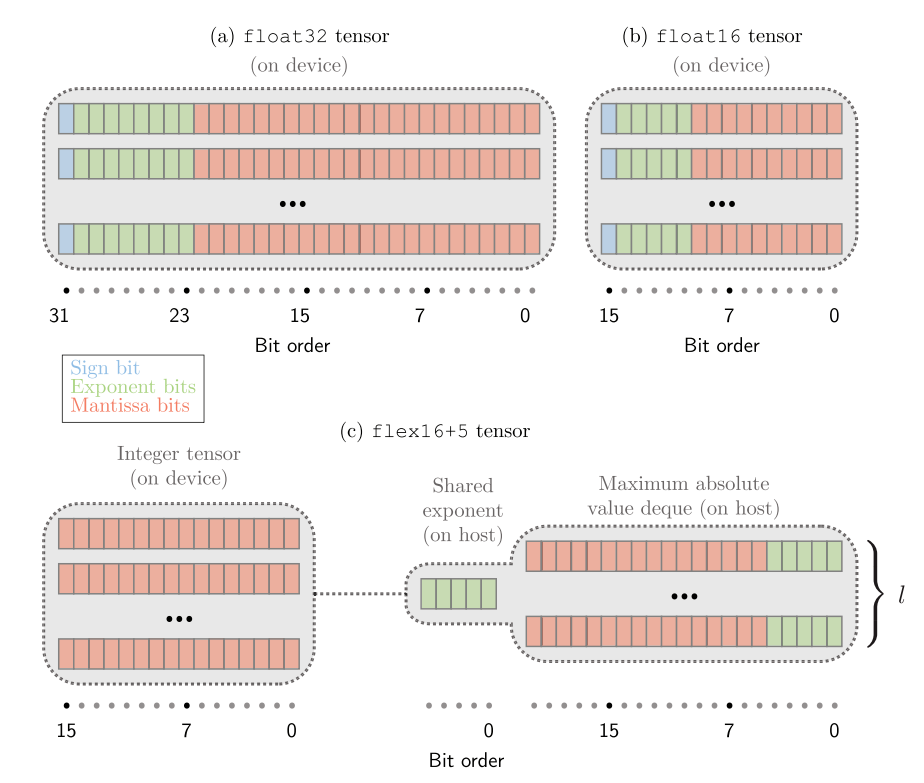

Comparison of the float32, bfloat16, and float16 numerical formats. The...
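
All three formats use 1 sign bit. float32 has an 8-bit exponent and a 23-bit fraction. bfloat16 keeps the same 8-bit exponent but only 7 fraction bits, so its range matches float32 while its precision is much lower. float16 has a 5-bit exponent and a 10-bit fraction, which gives it more precision than bfloat16 but a far smaller range. The Python sketch below makes these trade-offs visible. It assumes bfloat16 is produced by round-to-nearest-even on the upper 16 bits of the float32 encoding, and it uses the `struct` `'e'` format for float16. The helper names are hypothetical, and NaN inputs are not handled.

```python
import struct

# (sign, exponent, fraction) bit widths of each format
FORMATS = {"float32": (1, 8, 23), "bfloat16": (1, 8, 7), "float16": (1, 5, 10)}

def to_bfloat16(x: float) -> float:
    """Round x to bfloat16, returned as a Python float.

    x is first rounded to float32, then the low 16 bits are dropped with
    round-to-nearest-even (ties go to an even upper half).
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    upper = ((bits + rounding_bias) >> 16) & 0xFFFF
    return struct.unpack("<f", struct.pack("<I", upper << 16))[0]

def to_float16(x: float) -> float:
    """Round x to IEEE 754 binary16; struct raises OverflowError if out of range."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

x = 1 / 3
print(struct.unpack("<f", struct.pack("<f", x))[0])  # 0.3333333432674408
print(to_bfloat16(x))                                # 0.333984375
print(to_float16(x))                                 # 0.333251953125
```

The example shows bfloat16 losing precision relative to float16 on the same input. Conversely, a value such as 1e6 still fits in bfloat16 (after rounding) but makes `to_float16` raise `OverflowError`, because float16's largest finite value is 65504.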